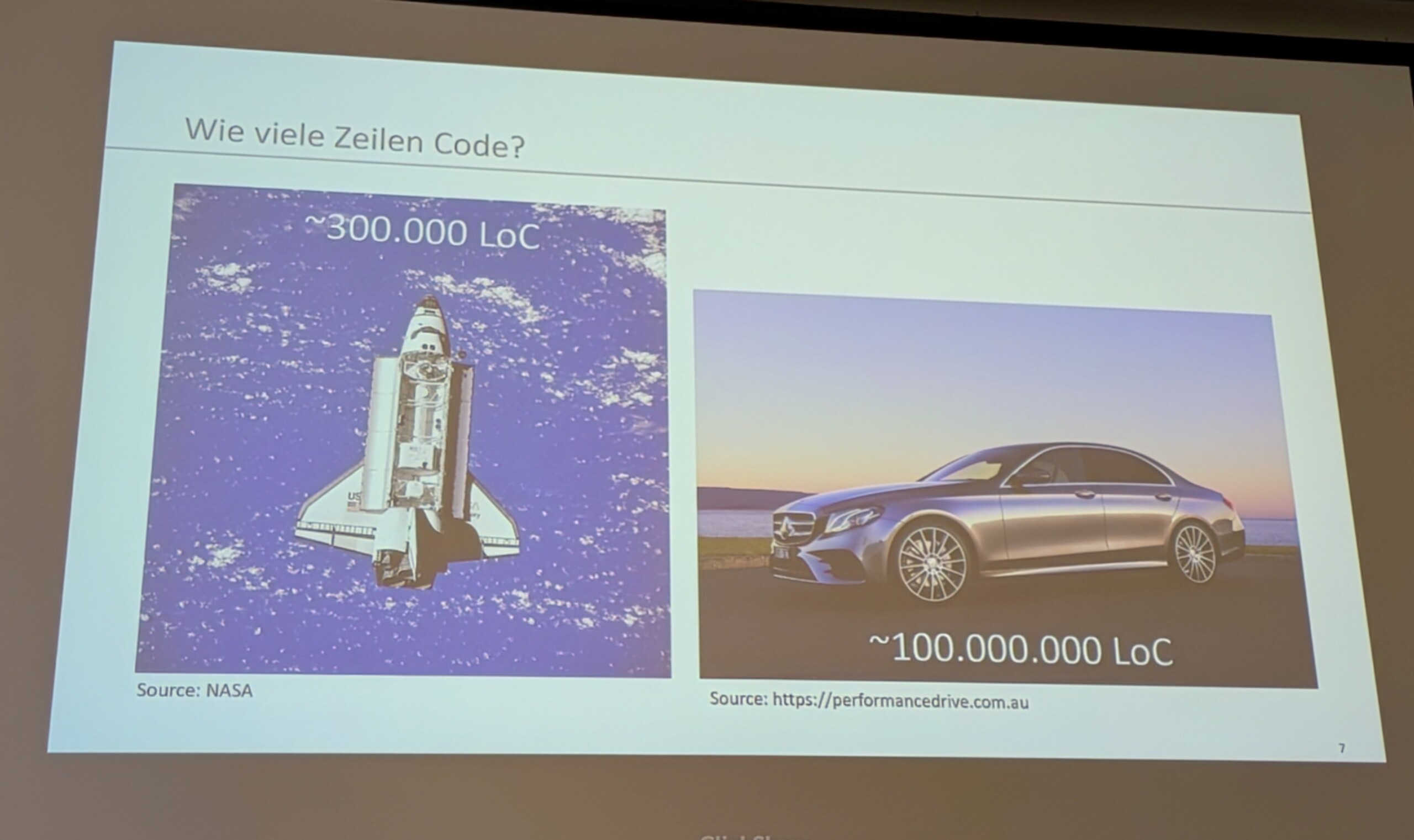

Artificial intelligence (AI) improves the vulnerability analysis of IT systems, networks and applications and helps detect security threats. However, new variants of malware are also constantly being developed using AI. In 2018 alone, around 250 million new types of malware were identified, according to Mathias Fischer, Professor of Computer Networks at the University of Hamburg. Meanwhile, programming is becoming increasingly complex. Cars in the 21st century are based on 100 million lines of code (LoC). "300,000 LoC would be enough to get the space shuttle off the ground," said Fischer. Every additional line can become a new gateway for hackers, he warned. "As the number of LoC increases, so does the probability of security vulnerabilities."

Knowledge transfer from universities can help companies to grow and develop clever ideas that stimulate entrepreneurship. Research can boost competitiveness, and scientific findings can help resolve pressing issues. This is the idea behind the "Business meets Science" event series, organised by the Chamber of Commerce and the Hamburg Innovation Contact Centre. During these events, academics from various universities present their latest research findings, e.g. on drones, sustainability and digitalisation. Cyber attacks caused €179 billion in economic damage across Germany in 2024, and cyber security must be on the economic agenda, according to Katharina Thomsen, head of Technology, Knowledge and IT in the Chamber of Commerce's Innovation and New Markets division. "Cyber security is as much a part of everyday life as brushing your teeth. Neglecting it will have painful repercussions," she added.

AI as a curse and a blessing

Identify yourself!

Cyber criminals are constantly using phishing emails, dangerous downloads and other deceptive means to access computer systems without authorisation. “However, clearly identified systems can be made hacker-proof,” said Volker Skwarek, Professor of Computer Engineering and Head of the Cybersec Research and Transfer Centre (FTZ) at the Hamburg University of Applied Sciences (HAW Hamburg). That sounds simple, but nothing could be further from the truth! Although digital identities ensure that only authorised users can access certain systems, this protective measure is often undermined in everyday life. An employee, for instance, can request a new printer that is then connected to the network without being appropriately authenticated. Network devices with their own software, storage functions and remote access options are ideal gateways for hackers. Peripheral devices that are handled carelessly can swiftly become vulnerabilities and prove the weakest link in a company's security concept. Commenting on carelessness, Skwarek warned: “If you want to be on the safe side, never scan a QR code. You never know where the code leads to.”

Coding with LLMs - easy or dangerous?

Large language models (LLMs) such as GPT, Mistral and Llama can easily generate and optimise code. "Prompt-driven programming enables developers to generate code based on natural language," said Professor Riccardo Scandariato, Head of the Institute for Software Security at the Hamburg University of Technology. But how secure is automatically generated code? LLMs can generate faulty code if the prompts are unclear or badly phrased. Prompt injections, whereby hackers secretly infiltrate communication with LLMs and influence the code, pose a real danger. "The right prompt engineering can significantly improve security," said Scandariato, referring to Recursive Criticism and Improvement (RCI). During this multi-stage prompting process, the code is checked and improved in repeated loops, in terms of both functionality and security.
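The RCI loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure presented at the event: the function names `rci_loop` and `fake_llm`, the prompt wording and the "NO ISSUES" stop signal are all hypothetical, and the stand-in `fake_llm` returns canned answers so that the example runs without a real model.

```python
from typing import Callable


def rci_loop(task: str, llm: Callable[[str], str], max_rounds: int = 3) -> str:
    """Generate code for a task, then alternate critique and improvement.

    `llm` is any function that maps a prompt to a text reply (hypothetical
    interface, not a real library API).
    """
    code = llm(f"Write code for the following task:\n{task}")
    for _ in range(max_rounds):
        critique = llm(
            "Review the following code for functional bugs and security "
            f"vulnerabilities. Reply with NO ISSUES if you find none.\n{code}"
        )
        # Stop as soon as the reviewer reports nothing left to fix.
        if "NO ISSUES" in critique.upper():
            break
        code = llm(
            "Improve the code based on this critique.\n"
            f"Critique:\n{critique}\nCode:\n{code}"
        )
    return code


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model, used only to make the sketch runnable."""
    if prompt.startswith("Write code"):
        # First draft: builds an SQL query by string concatenation.
        return "query = \"SELECT * FROM users WHERE name = '\" + name + \"'\""
    if prompt.startswith("Review"):
        if "+ name +" in prompt:
            return "SQL injection: user input is concatenated into the query."
        return "NO ISSUES"
    # Improvement step: switch to a parameterised query.
    return 'cursor.execute("SELECT * FROM users WHERE name = ?", (name,))'


result = rci_loop("look up a user by name", fake_llm)
print(result)
```

In this toy run, the first draft is criticised for an SQL injection risk, replaced with a parameterised query, and then accepted in the second review round. With a real model, the quality of the result depends on how well the critique step actually finds problems.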

ys/pb
